Apple Glasses change the rules of the game. Every surface will be a touchscreen

You come home. You enter the alarm code on the touch panel mounted on the wall. You walk into the living room and, on another screen, select a lighting preset made for watching a movie. One more tap on the screen and the coffee machine starts brewing. In reality, however, none of these screens exist. Only you can see them, through your Apple Glasses.

Apple Glasses may have more uses than we think. Initially, it seemed that Apple Glasses would only display notifications and perhaps simple information, such as the weather.

Later, we learned that Apple is working on a new display technology for iPhones and iPads. The screens of these devices would be visible only to the owner wearing Apple Glasses; bystanders would see a black screen.

Now it turns out that Apple has patented a completely new use for the glasses: turning any surface into a touchscreen.

Any surface can be a touchscreen as long as you wear Apple Glasses.

Apple has patented this idea, which for now only means that the company is considering such a feature. So far, there is no confirmed information that Apple's glasses will be equipped with this technology.

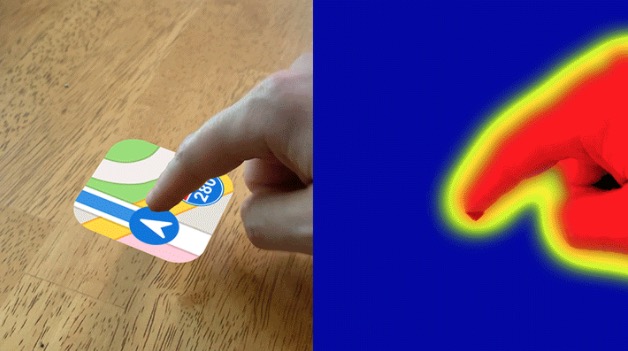

Nevertheless, such a system would make sense, and if any company could implement it effectively, it is Apple. The patent describes how the system would work: the glasses would recognize elements of the environment, detect flat surfaces and track finger movements on those surfaces. The system relies on the temperature difference between the user's finger and the surface.

When you touch a table with your finger, the temperature at the point of contact rises slightly, and a thermal imaging camera would register this as a heat trace. On this basis, the glasses would determine whether the user has touched a given surface.
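To make the thermal-trace idea more concrete, here is a minimal, hypothetical sketch in Python. It assumes the glasses capture two thermal frames (before and after a possible touch) as grids of temperatures in °C and looks for the spot where the surface warmed up the most. The function name `detect_touch` and the 0.5 °C `min_rise` threshold are illustrative assumptions, not details taken from Apple's patent.

```python
from typing import List, Optional, Tuple

# A thermal frame: rows of per-pixel temperatures in degrees Celsius (assumed format).
Frame = List[List[float]]


def detect_touch(before: Frame, after: Frame, min_rise: float = 0.5) -> Optional[Tuple[int, int]]:
    """Hypothetical helper: return (row, col) of the largest temperature rise
    between two frames if it exceeds min_rise, otherwise None."""
    best: Optional[Tuple[int, int]] = None
    best_rise = min_rise
    for r, (row_b, row_a) in enumerate(zip(before, after)):
        for c, (t_b, t_a) in enumerate(zip(row_b, row_a)):
            rise = t_a - t_b  # warming left behind by a fingertip shows up as a positive rise
            if rise > best_rise:
                best_rise = rise
                best = (r, c)
    return best


# Example: a uniform 22 °C table surface, then a residual warm spot where a finger touched.
before = [[22.0] * 4 for _ in range(3)]
after = [row[:] for row in before]
after[1][2] = 23.1
print(detect_touch(before, after))  # (1, 2)
```

A real system would also have to filter out sensor noise and distinguish a deliberate tap from, say, a warm mug being set down; this sketch only shows the basic principle of comparing frames.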

So why could Apple be the one to implement such a system?

The whole augmented reality industry is still in its infancy, but Apple has had the greatest practical successes in it. Only Apple has managed to put an advanced environment recognition system into consumer devices. I mean the lidar in the iPad Pro, which is also expected to arrive in this year's iPhones. Lidar maps the surroundings very accurately in 3D and enables extremely precise AR applications.

Let's also look at portrait mode in iPhone cameras. It is also extremely effective at separating the successive planes of a photo, creating a convincing simulation of background blur. Many manufacturers offer a similar feature in their smartphones, but few blur the background as effectively as Apple. The power of Apple's system is also demonstrated by apps such as Focos, which let you control the look and extent of the blur.

Apple has a lot of experience in analyzing how objects are arranged in three-dimensional space. It is only natural to apply this experience to the glasses.

For now, however, it is not known when Apple Glasses might hit the market. We only know that production of a test batch has started, but the testing itself may take two to three years. A new product category requires long preparation.

Although none of the leaks have been officially confirmed, each new piece of information makes me even more excited about Apple Glasses. I believe they will be "the next big thing" in the world of technology. I had high hopes for Google Glass, but that project didn't work out; perhaps it was too early for such a product. If Apple Glasses do hit the market, however, they have a great chance of success, as was the case with the Apple Watch and later with AirPods.

Don't miss any new articles. Follow Spider's Web on Google News.